Troubled Tables (Facebook Expansion Study)

What are the reasons for the errors in Tables A.1, A.2, and A.32 in Social Media and Mental Health?

There are only three tables with descriptive statistics in Social Media and Mental Health and all three of them contain errors. Investigating why this is so reveals further underlying problems.

Trouble with Table A.1

Column 4 of Table A.1 (Summary Statistics by Facebook Expansion Group) is labeled Fall 2005. It should therefore describe all the colleges in the Integrated Postsecondary Education Data System (IPEDS) database that are not in the first three columns (the earlier expansion groups). Yet the Number of colleges row gives the total for column 4 as only 204.

IPEDS covers roughly 7,000 postsecondary institutions, and any reasonable count of U.S. colleges runs at least into the thousands. A count of 204 for column 4 is therefore obviously far too low.

Trouble with Table A.32

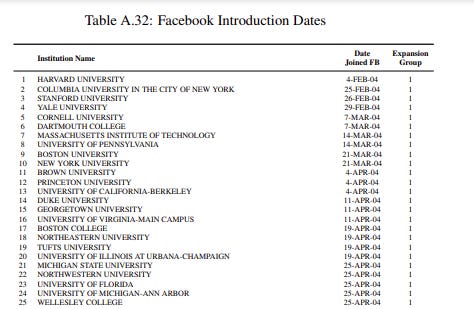

To understand Table A.1 we need to examine Table A.32, titled Facebook Introduction Dates:

The table lists the 775 colleges to which Facebook expanded between Spring 04 and Spring 05. Something is strange, however, at the end of the table.

The colleges at the end of the list are marked as Expansion Group 4 even though they gained access to FB in Spring 05. And yet the Note under the table states:

For the set of colleges that appear only in the NCHA dataset, we list the Fall of 2005 as the semester in which Facebook was introduced (expansion group 4).

So by the table's own Note, Expansion Group 4 should consist of the colleges that are not listed in the table. Yet Table A.32 labels many listed colleges as Expansion Group 4.
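To make the inconsistency concrete, here is a minimal Python sketch of the classification rule the Note describes. The helper name is hypothetical. The semester-to-group mapping (Spring 2004 as group 1, Fall 2004 as group 2, Spring 2005 as group 3) is an assumption inferred from the four semesters covered by Table A.1; it is not taken from the replication package.

```python
from typing import Optional

# ASSUMED mapping from introduction semester to expansion group, inferred from
# the four semesters in Table A.1 (not copied from the replication package).
LISTED_SEMESTER_TO_GROUP = {"Spring 2004": 1, "Fall 2004": 2, "Spring 2005": 3}

# Per the Note under Table A.32: colleges NOT listed in the table are assigned
# Fall 2005, i.e. expansion group 4.
UNLISTED_GROUP = 4


def expansion_group(intro_semester: Optional[str]) -> int:
    """Return the expansion group implied by the Note (hypothetical helper).

    intro_semester is the semester shown in Table A.32, or None for a college
    that does not appear in the table at all.
    """
    if intro_semester is None:
        return UNLISTED_GROUP
    return LISTED_SEMESTER_TO_GROUP[intro_semester]


if __name__ == "__main__":
    # A college listed with a Spring 2005 date lands in group 3, never 4.
    print(expansion_group("Spring 2005"))
    # Only an unlisted college gets group 4.
    print(expansion_group(None))
```

Under this rule, group 4 can never appear next to a date that is listed in Table A.32, and that is exactly the rule the end of the table violates.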

Obsolete Group Classification

To understand the discrepancy in Table A.32, we need to look at the code that handles Expansion Group numbering in the data preparation file Data_Prep_ACHA_1.do:

**********************************************************

*** Make FB expansion variable commensurate to dataset ***

**********************************************************

* fbexp=3 and fbexp=4 both denote schools that got FB in the spring of 2005 (fbexp=3 got it early in the spring and fbexp=4 got it late in the spring)

* Since the survey is administered once in the fall and once in the spring and since we don't know exactly when in a given semester it is administered, we can bunch fbexp=3 and fbexp=4 together

replace fbexpgrp=3 if fbexpgrp==4

replace fbexpgrp=4 if fbexpgrp==5

* Notice fbexpgrp=4 (formerly fbexpgrp=5) denotes universities that were not in the dataset I sent the ACHA. That's likely because they got access to FB after the last uni in the dataset I sent got access

As the comments show, there were originally five expansion groups: G3 = early S05, G4 = late S05 and G5 = F05. The authors eventually decided to combine G3 and G4 into a single G3 (Spring 05) expansion group and to rename G5 to G4.

By now readers will likely have guessed that columns 3 and 4 of Table A.1 correspond to the obsolete early and late Spring 05 groups. This can be confirmed in Tables_Appendix.do, which takes the group numbering from IntroDatesACHA.csv. That file lists only the Spring 04 to Spring 05 expansions and still uses the obsolete numbering.
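The two replace statements in Data_Prep_ACHA_1.do can be mirrored in a short Python sketch; the function name is hypothetical. The sketch shows how any file that keeps the old five-group numbering ends up with a "group 4" meaning late Spring 05 rather than Fall 05.

```python
def recode_expansion_groups(old_groups: list[int]) -> list[int]:
    """Mirror the two Stata `replace` statements in Data_Prep_ACHA_1.do.

    Old five-group numbering (per the code comments):
        3 = early Spring 05, 4 = late Spring 05, 5 = Fall 05
    New four-group numbering:
        3 = Spring 05 (early and late combined), 4 = Fall 05
    """
    # replace fbexpgrp=3 if fbexpgrp==4  -> merge late Spring 05 into group 3
    step1 = [3 if g == 4 else g for g in old_groups]
    # replace fbexpgrp=4 if fbexpgrp==5  -> rename Fall 05 from 5 to 4
    return [4 if g == 5 else g for g in step1]


old = [1, 2, 3, 4, 5]
new = recode_expansion_groups(old)
print(new)  # [1, 2, 3, 3, 4]

# A file that skips this recode still labels late Spring 05 colleges "4",
# which under the new scheme reads (wrongly) as Fall 05.
for o, n in zip(old, new):
    if o != n:
        print(f"old group {o} -> new group {n}")
```

The order of the two statements matters. Running the second one first would turn group 5 into 4, and the first would then fold it into 3, collapsing Fall 05 into Spring 05.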

Mismatch in College Counts (Table A.1)

A subsequent problem is that the last two rows of Table A.1 are mismatched:

Number of colleges 58 231 263 204

Number of colleges (NCHA subsample) 40 124 120 136

The problem is that the two rows use different groupings. In the top row, columns 3 and 4 count colleges in the early and late Spring 05 expansions respectively. In the bottom row, they count colleges in the Spring 05 and Fall 05 expansions respectively.

The correct numbers should be:

58 231 467 ???

40 124 120 136

Here 467 = 263 + 204 (early plus late Spring 05). The ??? stands for the 6,000+ colleges that are in IPEDS but not among the 775 colleges in the Spring 04 to Spring 05 expansion list (Table A.32).
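The corrected top row can be reproduced with simple arithmetic in the sketch below. The IPEDS total is only the rough figure of about 7,000 institutions quoted earlier, so the Fall 05 value is an approximation, not a count from the data.

```python
# Top row of Table A.1 as published; columns 3 and 4 use the obsolete
# early/late Spring 05 split.
published_top_row = [58, 231, 263, 204]

# Merge early and late Spring 05 into a single Spring 05 column.
spring05 = published_top_row[2] + published_top_row[3]

# Rough Fall 05 count: IPEDS institutions not among the 775 colleges listed in
# Table A.32. IPEDS_TOTAL is an approximate figure, not an exact count.
IPEDS_TOTAL = 7000
LISTED_IN_A32 = 775
fall05_approx = IPEDS_TOTAL - LISTED_IN_A32

corrected_top_row = [published_top_row[0], published_top_row[1], spring05, fall05_approx]
print(corrected_top_row)  # [58, 231, 467, 6225]
```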

A Mistaken Conclusion (NCHA fraction)

Relying on the erroneous Table A.1, the authors of Social Media and Mental Health mistakenly conclude:

Online Appendix Table A.1 also shows the number of colleges in the NCHA dataset that received Facebook access in each semester between the spring of 2004 and the fall of 2005. Other than the spring of 2004, when Facebook was first introduced, the fraction of colleges in the NCHA dataset that received Facebook access in each semester is fairly equally distributed across the remaining introduction semesters.

In reality the fraction falls rapidly: 40/58 ≈ 0.69, 124/231 ≈ 0.54, 120/467 ≈ 0.26 and 136/6000+ < 0.025. In other words, the NCHA mental health survey samples include the majority of the colleges that gained Facebook access in 2004 but relatively few of those that gained it in 2005.
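The fractions can be checked directly with the sketch below. For the Fall 05 column it uses 6,000 as a lower bound on the number of colleges, so the resulting fraction is an upper bound.

```python
ncha_counts = [40, 124, 120, 136]   # NCHA subsample row of Table A.1
all_counts = [58, 231, 467, 6000]   # corrected totals; 6000 is a lower bound
labels = ["Spring 04", "Fall 04", "Spring 05", "Fall 05"]

fractions = [n / d for n, d in zip(ncha_counts, all_counts)]
for label, n, d, f in zip(labels, ncha_counts, all_counts, fractions):
    print(f"{label}: {n}/{d} = {f:.3f}")
# Spring 04: 40/58 = 0.690
# Fall 04: 124/231 = 0.537
# Spring 05: 120/467 = 0.257
# Fall 05: 136/6000 = 0.023
```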

Trouble with Table A.2

There is also a subtle problem with Table A.2:

Here the row

Index Depression Services -0.00 -0.03 -0.02 -0.01

The entries in this row should have a (weighted¹) sum of nearly zero, because "The averages are taken in the pre-period; i.e., up to and excluding 2004. All indices are standardized so that, in the pre-period, they have a mean of zero and a standard deviation of one." Yet all four entries are negative, so no positively weighted combination of them can be zero.
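The sketch below shows that, because every entry in the row is negative, no choice of positive weights brings the weighted average to zero. The weights are hypothetical stand-ins for the unknown number of pre-period students in each group.

```python
def weighted_mean(values: list[float], weights: list[float]) -> float:
    """Weighted average of group means, with positive weights."""
    total = sum(weights)
    return sum(v * w for v, w in zip(values, weights)) / total


# "Index Depression Services" row of Table A.2 (pre-period group averages).
row = [-0.00, -0.03, -0.02, -0.01]

# Hypothetical weights, e.g. numbers of pre-period respondents per group.
for weights in ([1, 1, 1, 1], [500, 200, 150, 50], [10, 1, 1, 1]):
    print(weights, round(weighted_mean(row, weights), 4))
```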

Looking at the Stata code in Data_Prep_ACHA/Data_Prep_ACHA_2.do, it turns out the authors standardized pre-Facebook, not pre-2004:

summ eq_index_`k' if T==0

replace eq_index_`k' = (eq_index_`k'-r(mean))/r(sd)

Here T==0 selects students at colleges that did not (yet) have access to Facebook (T as in Treatment = Facebook access). To standardize over the period up to and excluding 2004, the authors should instead have used

summ eq_index_`k' if year<2004
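A toy Python sketch makes the difference concrete. The records are invented for illustration and are not NCHA data. The helpers only mirror the logic of the two Stata lines above, under the stated assumptions. Standardizing on the T==0 sample gives that whole sample a mean of zero, but its pre-2004 subset need not have a mean of zero.

```python
from statistics import mean, stdev

# Hypothetical toy data (NOT NCHA data): (survey year, T, raw index value),
# where T = 1 means the student's college already had Facebook access.
records = [
    (2001, 0, 1.0), (2002, 0, 2.0), (2003, 0, 3.0),  # pre-2004
    (2004, 0, 4.0), (2005, 0, 5.0),                   # 2004-05, no Facebook yet
    (2004, 1, 6.0), (2005, 1, 7.0),                   # Facebook access
]


def is_pre_fb(year, t):
    """Reference sample used in the code: T==0."""
    return t == 0


def is_pre_2004(year, t):
    """Reference sample described in the table note: year<2004."""
    return year < 2004


def standardize(rows, in_reference):
    """Rescale the index with the mean and SD of a reference subsample.

    Mirrors `summ x if <cond>` followed by `replace x = (x-r(mean))/r(sd)`;
    statistics.stdev, like Stata's summarize, uses the n - 1 denominator.
    """
    ref = [x for (year, t, x) in rows if in_reference(year, t)]
    m, s = mean(ref), stdev(ref)
    return [(year, t, (x - m) / s) for (year, t, x) in rows]


def subsample_mean(rows, keep):
    """Mean of the (standardized) index over the rows selected by keep."""
    return mean([x for (year, t, x) in rows if keep(year, t)])


by_pre_fb = standardize(records, is_pre_fb)
by_pre_2004 = standardize(records, is_pre_2004)

print(subsample_mean(by_pre_fb, is_pre_fb))      # about 0: T==0 is the reference
print(subsample_mean(by_pre_fb, is_pre_2004))    # negative: pre-2004 is only part of it
print(subsample_mean(by_pre_2004, is_pre_2004))  # 0 by construction
```

With these made-up values, the pre-2004 mean comes out at about -0.63 under the T==0 standardization. Only the year<2004 version guarantees a zero pre-2004 mean.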

Pre-FB vs Pre-2004 Distinction

Pre-2004 students are simply those who took the NCHA survey before 2004. Pre-FB students, however, are those who took the survey at colleges that did not yet have access to Facebook, and that group includes many students in 2004 and 2005.

The index in Table A.2 was standardized over the pre-FB sample, but the column averages cover only pre-2004 students, so they need not sum to zero. The ‘missing’ students needed for a (weighted) sum of zero are those surveyed in 2004 and 2005 at colleges without Facebook access. For some reason, these students' use of mental health services appears to have increased considerably in 2004 and 2005.
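The argument can be written as a one-line identity. Because the index has mean zero over the whole pre-Facebook sample, n_pre2004 · mean_pre2004 + n_untreated0405 · mean_untreated0405 = 0. If the pre-2004 mean is negative, the 2004-05 untreated mean must therefore be positive. The sketch below solves for it; the counts and means in the example are hypothetical.

```python
def implied_untreated_mean(n_pre2004: int, mean_pre2004: float,
                           n_untreated_0405: int) -> float:
    """Mean index of 2004-05 students at colleges without Facebook, implied by
    the index having mean zero over the whole pre-Facebook (T==0) sample:

        n_pre2004 * mean_pre2004 + n_untreated_0405 * mean_untreated_0405 = 0
    """
    return -n_pre2004 * mean_pre2004 / n_untreated_0405


# Hypothetical inputs: a slightly negative pre-2004 mean, in the spirit of
# Table A.2, and a smaller group of untreated 2004-05 respondents.
m = implied_untreated_mean(n_pre2004=1000, mean_pre2004=-0.015, n_untreated_0405=250)
print(round(m, 3))  # 0.06
```

The smaller the untreated 2004-05 group is relative to the pre-2004 group, the larger its implied mean has to be.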

Incorrect Terminology (Preperiod)

The authors repeatedly misuse the term ‘pre-period’ in Social Media and Mental Health, for example when they write:

Our indices are constructed as follows: first, we orient all variables that compose an index in such a way that higher values always indicate worse mental health outcomes; second, we standardize those variables using means and standard deviations from the preperiod; third, we take an equally weighted average of the index components, excluding from the analysis observations in which any of the components are missing; fourth, we standardize the final index.

In reality, they standardize for pre-Facebook, not pre-2004.

Similarly in the following sentence:

The 2 percentage point increase corresponds to a 9 percent increase over the preperiod mean of 25 percent for depression and a 12 percent increase over the preperiod mean of 16 percent for generalized anxiety disorder.

The convention in difference-in-differences papers is to use the term ‘pre-treatment’, or a more specific term, which in this case would be ‘pre-Facebook’.

Discussion

Some of the errors are difficult to spot, such as the standardization problem in Table A.2. Others were missed despite being obvious: the number of colleges in column 4 of Table A.1 is more than an order of magnitude lower than it should be. The lesson is that authors can easily develop blind spots², and that editors and reviewers cannot be relied on to catch severe errors in a paper this large.

All three tables with descriptive statistics in Social Media and Mental Health contain errors, which raises questions about the accuracy of the study's many regression results. Unfortunately, a potential error is far harder to spot in ‘cooked’ data. Such data consist of optimal solutions to often complicated equations, computed with proprietary software (Stata in this case).

The authors omit nearly all the relevant descriptive statistics, such as mental health trends as measured by the NCHA (see Facebook Expansion: Invisible Impacts?). They also omit intermediate results: all files were deleted from the Intermediate folder of the Replication Package before it was published. Readers therefore have little opportunity to spot inconsistencies. This is a serious problem, given that the study is de facto unverifiable because the crucial NCHA dataset is not public.

Conclusion

Each of the three tables with descriptive statistics in Social Media and Mental Health contains errors caused by discrepancies with the underlying code. The paper also repeatedly confuses the pre-period (pre-2004) with the pre-treatment period (pre-Facebook).

For those of us who are not experts in the mental health impact of social media, could you please offer some implications of the problems with the authors' study?