Some Notes on Two 'Community Notes'

Of the two Community Notes that appeared under Haidt’s 'multi-week SM experiments' tweet, the first was outright misinformation while the second is at best misleading.

On August 29, Zach Rausch and Jonathan Haidt posted on Substack a partial critique of Chris Ferguson’s meta-analytic review of experiments measuring how social media (SM) use impacts various aspects of mental well-being. This critique was largely based on my own critique, Fundamental Flaws in Meta-Analytical Review of Social Media Experiments, initially written in April.

Ferguson’s primary conclusion was that, methodological issues aside, experimental studies actually undermine “causal claims by some scholars (e.g., Haidt, 2020; Twenge, 2020) that reductions in social media time would improve adolescent mental health.” In addition, Ferguson made various public statements presenting his review as showing that SM reduction has no effect on mental health (for example, a tweet declaring that “reducing social media time has NO impact on mental health”).

The initial critique by Rausch and Haidt showed that Ferguson ignored the role of duration. He used very short abstinence experiments, which produced declines in momentary mood akin to withdrawal symptoms (thus confirming the theories of Haidt and Twenge about psychological dependence on SM), to counteract evidence from longer SM-reduction experiments that produced beneficial impacts on mental health.

Imagine a researcher concluding that heroin abstinence has no beneficial effects because he ‘subtracts’ momentary withdrawal symptoms manifest during the initial stages of abstinence from health improvements that emerge at later stages.

Besides this criticism, Haidt also posted the following tweet:

First Community Note

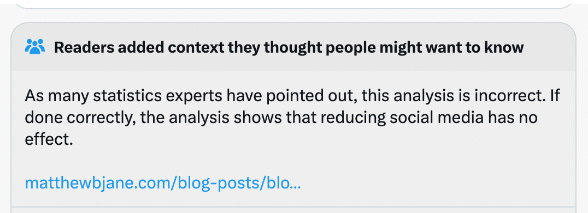

Some time after the tweet by Haidt, the following Community Note was attached to the tweet:

The link right under the Note was a blog article by Matthew B. Jané.

The assertion in the Community Note that a correct analysis “shows that reducing social media has no effect” is nearly identical to various public assertions by Ferguson about his own analysis.

Comments on the First Community Note

I do not know of a single statistics expert who has declared that correct analysis “shows that reducing social media has no effect.” Would any supporters of this Community Note please list at least 5 of the ‘many statistics experts’ who have expressed this view prior to the display of this Community Note?

For those who think that Matthew B. Jané agrees with this Community Note: please read what Jané actually wrote.

Jané in fact says the very opposite in his critique: he deems Ferguson to have committed “a common misinterpretation of the estimate in a random effects meta-analysis” and asserts that even if the estimate were exactly zero, it would not preclude the possibility that some SM use reductions have “genuine true effects that are very positive (i.e., social media use is detrimental).”

Jané also explicitly confirmed that he disagrees with the Community Note when he wrote, in a follow-up article on his blog, that “the second sentence is not true and I did not state that in the original post” (emphasis in the original). Here he refers to the second sentence of the Community Note: “If done correctly, the analysis shows that reducing social media has no effect.”

To summarize, the assertion that many ‘statistics experts’ concluded that a correct analysis “shows that reducing social media has no effect” appears to be a fabrication, while the sole source provided by the Note states the very opposite of this conclusion. In view of this, the Community Note amounts to a case of disinformation.

Second Community Note

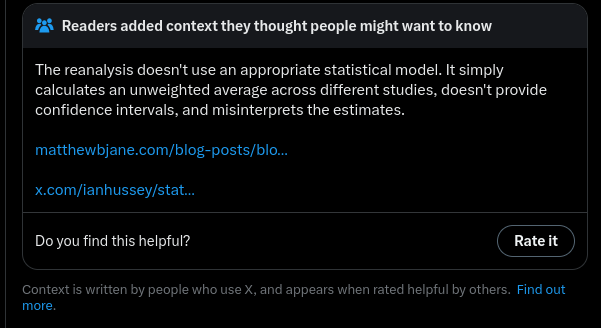

The first Community Note disappeared, but was soon replaced by a second note:

This Note does not really say much more than that Part 1 of the critique by Rausch and Haidt is not a meta-analysis.

Comments on the Second Community Note

Part 1 of course is not a meta-analysis – it is not even a full critique. After all, towards the end of Part 1, Rausch and Haidt announce that Part 2 will reveal errors in study selection as well as in effect sizes and sample sizes. Part 1 is mainly a demonstration, for general audiences, that Ferguson used very short abstinence experiments to attenuate evidence of beneficial impacts in longer SM time reduction experiments.

The Community Note also misunderstands the critique. The plain averages in Part 1 are not ‘estimates’ of anything; they are what they are: precise averages. The accusation that Rausch and Haidt misinterpret ‘the estimates’ makes no sense, since the plain averages are not estimates (which is why they have no confidence intervals).

This use of plain averages goes back to my initial critique of Ferguson. I had good reasons for it:

My criticism was that Ferguson neglected basic requirements of systematic reviews. One example was that even a quick look at the studies separated by duration would immediately reveal a relationship between duration and Ferguson’s effect sizes, something that any serious analysis would have to investigate.

Since I declared Ferguson’s meta-analytic design to be fundamentally flawed, why would I then use this very flawed design to argue anything about reality beyond critiquing the flawed model itself?

Even if I wished to use Ferguson’s model, at the time I did not have sufficient information to reproduce it – that was made possible only with additional information obtained by Rausch from Ferguson later on.

This is not rocket science: the crucial issue is that all the very short abstinence experiments have substantially negative effect sizes (per Ferguson), while the great majority of the longer SM use reduction experiments have substantially positive effect sizes.
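The “quick look” described above amounts to very little computation. The following minimal Python sketch splits a set of experiments at a duration cutoff and takes the plain, unweighted average of the effect sizes in each group. The study names, durations, effect sizes, and the 7-day cutoff are all hypothetical values invented for illustration; they are not Ferguson’s data.

```python
from statistics import mean

# Hypothetical experiments, invented for illustration only (NOT Ferguson's data).
# d > 0 means the intervention (less social media) improved the outcome.
studies = [
    {"name": "abstain_A", "duration_days": 1, "d": -0.30},
    {"name": "abstain_B", "duration_days": 3, "d": -0.20},
    {"name": "reduce_C", "duration_days": 21, "d": 0.25},
    {"name": "reduce_D", "duration_days": 28, "d": 0.40},
    {"name": "reduce_E", "duration_days": 14, "d": -0.05},
]


def plain_averages_by_duration(studies, cutoff_days=7):
    """Return (short_mean, long_mean): the unweighted mean d for studies
    shorter than cutoff_days and for studies at least that long.
    The 7-day default cutoff is an illustrative assumption."""
    short = [s["d"] for s in studies if s["duration_days"] < cutoff_days]
    long_ = [s["d"] for s in studies if s["duration_days"] >= cutoff_days]
    return mean(short), mean(long_)


short_avg, long_avg = plain_averages_by_duration(studies)
print(f"short (< 7 days):  {short_avg:+.2f}")
print(f"long  (>= 7 days): {long_avg:+.2f}")
```

No weighting, modeling, or confidence interval is involved: each group average is simply a description of the numbers in that group, which is all that is needed to notice that duration deserves investigation.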

Furthermore, it makes no difference whether one uses plain averages or Ferguson’s weighted averages (along with confidence intervals). Once I had sufficient information to reproduce Ferguson’s model, I showed this for my critique in Social Media Experiments and Weighted Averages.
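For readers unfamiliar with the distinction, the following Python sketch contrasts three summaries of the same invented effect sizes and sampling variances: (1) a plain average, which is a description and carries no confidence interval; (2) an inverse-variance (fixed-effect) weighted average; and (3) a random-effects weighted average using the DerSimonian-Laird estimator of between-study variance (tau²). Both weighted averages come with approximate 95% intervals. The data are hypothetical, and the DerSimonian-Laird estimator is chosen only because it is short to write; this is not a claim that it matches the settings of Ferguson’s Jamovi model, which may use a different estimator.

```python
import math

# Hypothetical effect sizes (Cohen's d) and sampling variances, invented for
# illustration only. Positive d = benefit from reducing social media use.
effects = [0.25, 0.45, -0.15, 0.30]
variances = [0.04, 0.02, 0.05, 0.03]


def plain_average(ys):
    """Unweighted mean: a description of the numbers, not an estimate."""
    return sum(ys) / len(ys)


def fixed_effect(ys, vs):
    """Inverse-variance weighted mean and its standard error."""
    ws = [1.0 / v for v in vs]
    est = sum(w * y for w, y in zip(ws, ys)) / sum(ws)
    return est, math.sqrt(1.0 / sum(ws))


def dersimonian_laird(ys, vs):
    """Random-effects mean, its SE, and tau^2 (DerSimonian-Laird estimator)."""
    ws = [1.0 / v for v in vs]
    fe, _ = fixed_effect(ys, vs)
    q = sum(w * (y - fe) ** 2 for w, y in zip(ws, ys))  # Cochran's Q
    c = sum(ws) - sum(w * w for w in ws) / sum(ws)
    tau2 = max(0.0, (q - (len(ys) - 1)) / c)  # truncated at zero
    ws_re = [1.0 / (v + tau2) for v in vs]
    est = sum(w * y for w, y in zip(ws_re, ys)) / sum(ws_re)
    return est, math.sqrt(1.0 / sum(ws_re)), tau2


def ci95(est, se):
    """Approximate 95% interval using the normal quantile 1.96."""
    return est - 1.96 * se, est + 1.96 * se


plain = plain_average(effects)
fe_est, fe_se = fixed_effect(effects, variances)
re_est, re_se, tau2 = dersimonian_laird(effects, variances)

fe_lo, fe_hi = ci95(fe_est, fe_se)
re_lo, re_hi = ci95(re_est, re_se)
print(f"plain average:  {plain:+.3f}")
print(f"fixed effect:   {fe_est:+.3f}  95% CI ({fe_lo:+.3f}, {fe_hi:+.3f})")
print(f"random effects: {re_est:+.3f}  95% CI ({re_lo:+.3f}, {re_hi:+.3f})  tau^2 = {tau2:.4f}")
```

The weighted averages shift the point value somewhat relative to the plain average, but with these made-up numbers all three summaries have the same sign, which illustrates how the choice of averaging need not change a qualitative conclusion.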

Rausch and Haidt themselves edited Part 1 to use weighted averages along with confidence intervals and, of course, they too found that it did not change the results on which their critique relied. In other words, the Community Note’s suggestion that the use of weighted averages would undermine the critique of Ferguson was not true.

Models and Reality

My criticism of the Community Notes does not mean that I think Rausch and Haidt have done everything perfectly. In my initial critique, I made it repeatedly clear that I consider Ferguson's meta-analytic design to be fundamentally flawed, especially in its use of disparate composite effects. This was not made crystal clear, however, in Part 1 of the Rausch and Haidt critique (the updated version is better in this regard). Some readers therefore might have been under the impression that one can use Ferguson’s effect sizes to formulate a compelling argument towards causality. My view is that Ferguson’s effect sizes are so flawed in design that any argument based on them is inherently bound to be weak.

Furthermore, to make a compelling argument toward causality, one has to address methodological issues of the experiments themselves, such as the possibility that they are producing biased results due to, for example, demand characteristics or placebo effects.

Allow me to also note that if one wished to make the strongest case for causality within Ferguson’s model, one should look at experiments that included a measure of depression or anxiety, the primary focus of Haidt and Twenge in their theories of social media impacts on mental health. This group of 11 studies produces (within Ferguson’s model) the effect estimate of d = +0.22 (+0.10, +0.39) even before any corrections of errors in the data – while with corrections we get d = 0.26 (see Social Media Experiments and Weighted Averages for details). It is the presence or absence of a mental health disorder outcome within the composite effect size that is the strongest moderator that I have found.

Note: The 16 experiments without an MH (depression or anxiety) outcome have a negative estimate of d = -0.03 (within Ferguson’s model). If we ask the Jamovi model specification that Ferguson used to run a moderator analysis on the MH/WB (mental health versus well-being) split, we get an MH moderator of d = +0.27 (+0.08, +0.46), p = 0.005.
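For readers curious what a moderator analysis on a binary split involves, here is a simplified Python sketch. It pools each subgroup (studies with an MH outcome and studies without one) separately with a DerSimonian-Laird random-effects model, then tests the difference between the two pooled estimates with a two-sided z-test. This subgroup comparison is a stand-in chosen for simplicity, not a reproduction of the Jamovi moderator analysis mentioned above, and all effect sizes and variances are hypothetical.

```python
import math

# Hypothetical (d, variance) pairs, invented for illustration only.
mh_studies = [(0.30, 0.04), (0.20, 0.03), (0.35, 0.05)]   # include an MH outcome
wb_studies = [(-0.10, 0.03), (0.05, 0.04), (-0.05, 0.02)]  # no MH outcome


def dersimonian_laird(pairs):
    """Random-effects pooled d and its SE (DerSimonian-Laird tau^2)."""
    ys = [y for y, _ in pairs]
    vs = [v for _, v in pairs]
    ws = [1.0 / v for v in vs]
    fe = sum(w * y for w, y in zip(ws, ys)) / sum(ws)
    q = sum(w * (y - fe) ** 2 for w, y in zip(ws, ys))  # Cochran's Q
    c = sum(ws) - sum(w * w for w in ws) / sum(ws)
    tau2 = max(0.0, (q - (len(ys) - 1)) / c)
    ws_re = [1.0 / (v + tau2) for v in vs]
    est = sum(w * y for w, y in zip(ws_re, ys)) / sum(ws_re)
    return est, math.sqrt(1.0 / sum(ws_re))


def subgroup_difference(group_a, group_b):
    """Difference between separately pooled subgroup estimates, with a
    two-sided z-test that assumes the two subgroups are independent."""
    est_a, se_a = dersimonian_laird(group_a)
    est_b, se_b = dersimonian_laird(group_b)
    diff = est_a - est_b
    se = math.sqrt(se_a ** 2 + se_b ** 2)
    z = diff / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return diff, se, z, p


diff, se, z, p = subgroup_difference(mh_studies, wb_studies)
print(f"MH minus no-MH: d = {diff:+.2f} "
      f"({diff - 1.96 * se:+.2f}, {diff + 1.96 * se:+.2f}), p = {p:.3f}")
```

A full meta-regression with a single shared between-study variance would generally give somewhat different numbers; the sketch only shows what a moderator test compares.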

Inconvenient Evidence

One additional concern I have with the second Community Note is that it appears to rebuke Haidt for merely noting that multi-week experiments tend to produce evidence of beneficial impacts. Haidt is simply countering accusations that there is no evidence of causality whatsoever, and the Community Note seems to imply that even if a hundred multi-week experiments all produced results indicating substantial benefit, one would somehow be forbidden to point this out as evidence of causality.

Note that neither of the two sources listed in the second Community Note is actually a reaction to this tweet! Instead, both sources are reactions to the Part 1 critique, yet the Community Note makes it appear as if both sources agree that it is wrong to tweet the results of the multi-week experiments in response to accusations that there is no evidence at all.

The idea that evidence below some arbitrary evidentiary threshold amounts to no evidence is a favorite tool of those who seek to discredit inconvenient evidence, and unfortunately the positioning of the Community Note under this particular tweet by Haidt serves this agenda very well, be it deliberately or by accident.

Picture a cartoon in the style of The Far Side. Scientist A: “There’s a 94% chance the large meteorite will hit Earth!” Scientist B: “What a relief! That’s below the 95% evidentiary threshold, and therefore there is NO EVIDENCE of danger!”

Simplicity versus Complexity

There’s another, more subtle concern that I have about the second Community Note: it promotes the view that simple methods are somehow inherently inferior to more advanced and complex methods. In particular, the Community Note and the two sources it links seem to imply that one must conduct a ‘random-effects’ model analysis before one is allowed to say anything at all regarding the evidence of causality in SM experiments.

I suspect those who hold this view are not aware that the widespread use of ‘random-effects’ models is a much more controversial and contested matter than they might realize (see here and here and here). That is doubly a problem when you have design flaws such as Ferguson’s composite effect size.

This is one reason I’m not thrilled about Rausch and Haidt replacing the averages in Part 1 with weighted averages and confidence intervals. I intentionally avoided this in my critique of Ferguson in order to avoid implying that there’s any validity to Ferguson’s model and, furthermore, in order to avoid any conflation of my criticism of Ferguson’s methodological negligence with an argument that there is causation.

Not everything that shines is gold – and that applies to statistics too. The use of confidence intervals and ‘random-effects’ models does not make an argument magically more rigorous and accurate.

The Misuse of Community Notes

Finally, I have a concern about the exploitation of Community Notes. The first Community Note was disinformation that misused and misrepresented the blog post from M. B. Jané.

The second Community Note is less objectionable but it is still at best misleading, especially in the way it seems to be a condemnation of the mere display of multi-week experimental results in a tweet that seeks to counter accusations of there being no evidence at all.

Furthermore, even if one agreed with its criticism of Part 1, it is the kind of judgment that could be made about gazillions of tweets on social science topics.

In particular, many of Ferguson’s tweets in the past would have to be slapped with much harsher Community Notes, especially those where he has made announcements such as that his review showed that “reducing social media time has NO impact on mental health” – something that is factually untrue.

Curiously, Ferguson recently deleted not only this particular tweet but also nearly every other tweet that he has made prior to September.

Note also that M. B. Jané actually agrees with much of my criticism of Ferguson: he criticizes the ‘composite’ effect size design by Ferguson, he classifies Ferguson’s interpretation of the results as a common misconception, and he dismisses Ferguson’s employment of a SESOI (smallest effect size of interest) as arbitrary and unfounded. Jané even goes beyond the scope of my critique in labeling Ferguson's assertion that almost all meta-analyses are statistically significant as “just not true.”

Given that Jané’s critique of Ferguson targets a paper published in a respectable peer-reviewed psychology journal, one that scholars such as Candice Odgers and Tobias Dienlin quickly used to chastise Haidt, it seems far more troubling and consequential than his objections to the first part of a series of Substack posts written for general audiences by Rausch and Haidt.

And yet it is Haidt, not Ferguson, who ends up the focus of a social media storm.

As I understand it, the mechanism behind Community Notes is that they require agreement from both sides on a certain left-right political spectrum. Haidt’s criticism of tech is opposed by many progressives who think it diverts attention from their favorite causes while it is also disliked by those libertarians who fear it will lead to infringements on freedoms – and that makes Haidt particularly vulnerable to the issuance of Community Notes.

Conclusion

Let’s hope that, in the future, Community Notes will be attached to tweets dealing with SM and MH controversies more fairly and accurately than they are now.