Haidt: Social Media in O&P Paper

Haidt does not fully understand the primary flaws of a 2019 paper by Orben & Przybylski and misinforms readers about its contents when attempting to use it to bolster his social media arguments.

In “Social Media is a Major Cause of the Mental Illness Epidemic in Teen Girls”, Haidt devotes considerable space to a 2019 paper in Nature Human Behaviour (NHB) by Orben & Przybylski (O&P) titled “The association between adolescent well-being and digital technology use”. Unfortunately, he ends up severely misleading readers.

The O&P Paper

O&P proclaimed that their analysis of three large data sets (YRBS, MtF, MCS) revealed the effects of technology on adolescent well-being to be “so minimal that they hold little practical value” — and similar to the effects of ‘neutral’ factors such as asthma or eating potatoes or wearing glasses.

Haidt correctly states that the O&P paper, especially its comparisons with eating potatoes or wearing glasses, has had an enormous influence on journalists and therefore on the public.

Unfortunately, Haidt describes the O&P paper inaccurately and, on some points, severely misinforms his readers about its contents.

Haidt on O&P

Haidt writes that O&P used an “average regression coefficient”, when in reality these were median coefficients. He writes that O&P were “using screen time use to predict positive mental health”, but some of the coefficients did not involve time at all (e.g. computer ownership), and none used time per se (they used rankings of time categories). Moreover, the predicted outcome was not mental health but a vague notion of ‘well-being’, which is a crucial distinction.

Haidt then makes a strange statement about a Twenge et alia critique of O&P:

Twenge and I argued in a published response paper in the same journal that Orben and Przybylski made 6 analytical choices, each one defensible, that collectively ended up reducing the statistical relationship and obscuring a more substantial association.

The 6 extreme flaws listed in the Twenge et alia paper are not defensible under any circumstances. The notion that these methodological misdeeds were somehow bad only when used together makes no sense — it is puzzling why Haidt, who was one of the critique’s four authors, would say that.

It is also important to note here that while the Twenge critique is — sans a few technical quirks — entirely valid and utterly devastating, it is nowhere close to being exhaustive: there are many, many, many other severe problems with the O&P paper.

One fundamental flaw missed by Twenge et alia is that O&P used Spearman’s rank correlations, which by itself invalidates any comparisons between their coefficients, including those with potatoes and glasses.

Also, while Twenge et alia rightly complained about O&P loading their controls with every imaginable mediator they could find (one of the oldest tricks in the book to attenuate inconvenient associations), they missed the fact that O&P left both sex (or gender) and age (or grade) out of their controls!

The failure by O&P to include gender among their controls is particularly astonishing, given that girls have roughly double the risk of mood disorders and related symptoms. This omission alone makes the paper read like a parody, especially since O&P boast that their work “underlines the importance of considering high-quality control variables” (see the Discussion section of the O&P paper).

There are many, many other flaws in the O&P paper, but this is not the place and time to explore them.

Social Media in O&P

Inexplicably, Haidt implies that O&P’s assertions about minimal effects do not apply to their findings on social media.

This is completely false.

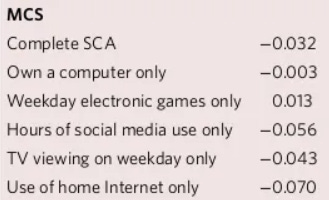

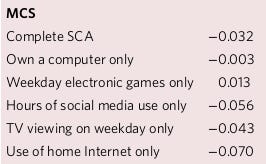

The O&P coefficient for “hours of social media use” in MCS is -.056 (Table 2). That is precisely the kind of result O&P misinterpreted to mean that the effect of social media use is so minuscule as to be practically zero (after all, it is even weaker than the -.071 result for digital technology use in YRBS).

Haidt also conveniently forgets all about the glasses comparison.

The O&P coefficient for wearing glasses in MCS is -.061 — stronger than the -.056 coefficient for social media.

Social Media versus Digital Media in O&P

Haidt then goes so far as to imply that the O&P coefficient for social media was much stronger than the O&P coefficients for other types of digital media:

In their own published report, when you zoom in on “social media,” the relationship is between 2 and 6 times larger than for “digital media.”

No.

The only data set with measures of social media and most other screen time components was MCS:

Here the O&P coefficients were -.070 for Internet, -.056 for social media, -.043 for TV, and +.013 for games (but see O&P Coefficient for Games in Notes).

O&P also calculated a “tech” coefficient (-.042) in MCS, which was used for comparisons with other factors in Table 3 of their paper and (incorrectly) equated with screen time.

Obviously the magnitude .056 (social media) is below .070 (Internet) and nowhere close to twice as large as .043 (TV) or .042 (tech).
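The comparison can be made explicit by dividing the magnitude of the MCS social media coefficient by each of the other MCS coefficients quoted in this post. A minimal Python sketch; the dictionary simply restates the numbers given above, and games (+.013) is left out because its sign is opposite and, as explained in the Notes, it is likely distorted by the missing sex control.

```python
# MCS median coefficients as quoted in this post.
mcs = {
    "Internet": -0.070,
    "social media": -0.056,
    "TV": -0.043,
    "tech (overall)": -0.042,
    "wearing glasses": -0.061,
}

social = abs(mcs["social media"])
# How many times larger (in magnitude) social media is than each other factor.
ratios = {
    name: social / abs(coef)
    for name, coef in mcs.items()
    if name != "social media"
}
for name, ratio in ratios.items():
    print(f"social media vs {name}: {ratio:.2f}x")
```

Every ratio falls between about 0.80 (Internet) and 1.33 (tech), far from the factor of 2 to 6 in Haidt’s claim.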

Discussion

Haidt in essence legitimizes the awful misuse of statistics by O&P that he criticized as a coauthor of “Underestimating digital media harm”. Given the methodological flaws of the O&P paper, none of the concocted ‘results’ within it have any scientific merit whatsoever. Haidt should not be undermining this reality in his desire to convince readers that social media access is an exceptionally harmful use of screens for adolescents.

Conclusion

Haidt’s attempt to use the O&P paper as evidence that social media is potentially more harmful than other types of digital screen use contains misinformation and relies on O&P’s misuse of statistics.

Notes:

O&P Coefficient for Games

Boys play video games much more than girls, and girls have roughly double the risk of mood disorders that boys have. Since O&P did not control for sex, the positive sign of their games coefficient is no doubt due to these two facts.
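The confounding argument can be checked with a toy simulation in which, by construction, gaming has no effect on well-being at all. The group means, sample size, and seed are made-up assumptions, not O&P’s data. Controlling for a binary sex variable is done here by demeaning both variables within each sex, which gives the same slope as adding a sex dummy to an OLS regression (the Frisch-Waugh-Lovell result).

```python
import random


def slope(x, y):
    """OLS slope of y on x with an intercept: cov(x, y) / var(x)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def demean_within(values, groups):
    """Subtract each group's mean (equivalent to adding group dummies as controls)."""
    totals, counts = {}, {}
    for v, g in zip(values, groups):
        totals[g] = totals.get(g, 0.0) + v
        counts[g] = counts.get(g, 0) + 1
    return [v - totals[g] / counts[g] for v, g in zip(values, groups)]


rng = random.Random(2019)
n = 50_000
is_girl = [rng.random() < 0.5 for _ in range(n)]
# Hypothetical data: boys game more and girls report lower well-being,
# but gaming itself has NO effect on well-being here.
games = [rng.gauss(1.0 if girl else 3.0, 1.0) for girl in is_girl]
wellbeing = [rng.gauss(-0.5 if girl else 0.0, 1.0) for girl in is_girl]

crude = slope(games, wellbeing)
adjusted = slope(demean_within(games, is_girl), demean_within(wellbeing, is_girl))
print(f"games coefficient without sex control: {crude:+.3f}")
print(f"games coefficient with sex control:    {adjusted:+.3f}")
```

In expectation the crude slope here is +0.125 (a covariance of 0.25 divided by a variance of 2), an artifact of the omitted sex variable, while the sex-adjusted slope is close to zero.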

Correction Aug 27: I mistakenly wrote “moderator” instead of “mediator”.

A mediator is an intermediate factor on a causal path, such as “heavy digital tech use” → “sleep deficiency” → “depression”; here, part of the effect of digital tech use on depression is indirect, via sleep deficiency.

Examples of potential mediators used by O&P are negative attitudes towards school, time spent with parents, parent distress and closeness to parents (see Twenge 2020), all of which could be influenced by heavy digital tech use and, in turn, influence various aspects of well-being.

Including mediators in a regression can greatly weaken the estimated association even when X truly has a strong effect on Y. In a sense you are subtracting the indirect effects. Taken to the extreme, if you include lung cancer as a control variable when studying smoking and mortality, you make sure that lung cancer deaths caused by smoking are not counted when assessing the risks of smoking!
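A small simulation with made-up effect sizes shows the attenuation. The exposure stands in for heavy digital tech use, the mediator for sleep deficiency, and the outcome for depression. The coefficients 0.8, 0.5, and 0.1, the seed, and the sample size are hypothetical. The coefficient on the exposure with the mediator “controlled” is computed via Frisch-Waugh-Lovell: regress the outcome on the part of the exposure not explained by the mediator.

```python
import random


def slope(x, y):
    """OLS slope of y on x with an intercept: cov(x, y) / var(x)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def residualize(x, z):
    """Residuals of x after regressing it on z (with an intercept)."""
    b = slope(z, x)
    mx, mz = sum(x) / len(x), sum(z) / len(z)
    return [a - mx - b * (c - mz) for a, c in zip(x, z)]


rng = random.Random(27)
n = 50_000
# Hypothetical causal chain: exposure -> mediator (0.8), mediator -> outcome (0.5),
# plus a small direct exposure -> outcome effect (0.1).
exposure = [rng.gauss(0, 1) for _ in range(n)]
mediator = [0.8 * e + rng.gauss(0, 1) for e in exposure]
outcome = [0.1 * e + 0.5 * m + rng.gauss(0, 1) for e, m in zip(exposure, mediator)]

total = slope(exposure, outcome)  # true total effect: 0.1 + 0.8 * 0.5 = 0.5
# Coefficient on exposure in the regression outcome ~ exposure + mediator.
direct = slope(residualize(exposure, mediator), outcome)  # true direct effect: 0.1
print(f"exposure coefficient, no mediator control:   {total:.3f}")
print(f"exposure coefficient, with mediator control: {direct:.3f}")
```

In this toy setup, adding the mediator as a control strips out about four-fifths of the effect, even though the exposure genuinely raises the outcome by construction.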

David, thanks as always for your extremely careful analyses of my writing.

In this case, it seems that your primary critique of me is that I was not critical enough of O&P. I did not want to accuse them of any kind of misconduct. I'm sure they can offer a defense of each of the 6 analytical moves (although I think the inclusion of emotional responses as moderators is probably not defensible). In any case, while you have caught some errors in my writing, such as not seeing that they used median coefficients rather than averages, I think you agree with me on the main conclusion, which is that their demonstration of essentially zero relationship is not valid. If we agree on that, then I do not think that what I wrote was "severely misleading" to readers. What do you think?